Can you use assert outside of unit tests?

Assertions are pieces of code that ensure a certain expectation of the developer holds at that position in the control flow. If the expectation is violated, the program is terminated and a bit of (hopefully) helpful context is printed. If you’re working from a decent IDE, it may even “catch” the assertion and offer a debug session to be started to inspect the situation and variables at the time of the failure.

History

You probably encounter assert more frequently when working in unit test code. Unit testing frameworks have adapted the concept of assert and enhanced it with many useful variants/helpers, including:

- Assert.AreEqual

- clojure.test/is

- unittest.TestCase.assertNotIn

- Assert.That

- EXPECT_NEAR

- rackunit/check-match

- pytest.raises

Pytest even takes over Python’s assert statement. But leaving that hijacking aside, Python has its own assert statement, and such assertions, which are part of a language rather than of a unit testing suite, are the focus here.

Looking at the pervasive use of the word “assert” in unit tests, the concept is quite similar. Like an assertion, a unit test is an expression of a developer’s expectation of what is supposed to happen. However, unit tests are usually more involved, may take (a little) longer and might require setup or tear-down code. An additional constraint of the assertions listed above is that they have a dedicated habitat and should exclusively be found in your unit testing code.

But unit tests did not invent assert. Assertions found their way into source code in the mid-60s, as the Algol W Language Description corroborates. The most prominent incarnation is probably the assert macro from C’s standard library from 1978. Kent Beck popularized unit testing for software development more than a decade later. So, that’s a sound “yes” to the opening question; otherwise assert would have felt awfully useless for 22 years.

Assertion Resistance

In code reviews, I sometimes received comments like this, even from senior developers:

No assertions in production code.

The first time, I was baffled. I defended my stance in that case and merged after we had settled the dispute. But it kept happening, and I kept having to justify individual asserts. At the companies I had worked for before, we had quite a lot of assertions in our production code, and I had never been confronted with that view.

Clearing misconceptions

One common “warning” was that assertions are dropped for release builds. Yes, that is the default, but we must make an important distinction: asserting is not exception handling! Those are two different tools for different jobs. Assertions are for the developer and the developer only. If they fail, the code has a bug! There is nothing a user of the software could do.

So we want assertions to fail for the developer, and conveniently, we write, test and debug our software in debug mode most of the time. That is also when you can still do something about it (because remember, a failing assertion indicates a bug).

Besides, nobody forbids leaving assertions on in release mode. With ever-faster hardware, some companies are switching to leaving them in. John Regehr [1] cites valgrind as a well-known tool that profits from always-on assertions.

After all, it might be better to fail ungracefully than to continue with an unknown/corrupted state—Erlang anyone? There is one important caveat, however: never assert on something with side effects because your code will behave differently depending on whether assertions are left in or not, even if none of them ever fires.
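To make the side-effect caveat concrete, here is a minimal sketch in Python, where running the interpreter with `-O` strips assert statements. The function names and the list-based job queue are made up for illustration.

```python
def drop_first_risky(queue):
    """Remove the first job. Broken when assertions are disabled."""
    # The pop() is part of the asserted expression. With `python -O` the
    # whole assert statement is stripped, so nothing gets removed.
    assert queue.pop(0) is not None, "queue must not contain None"


def drop_first_safe(queue):
    """Remove the first job whether or not assertions are enabled."""
    job = queue.pop(0)  # the side effect always runs
    assert job is not None, "queue must not contain None"


jobs = ["parse", "compile", "link"]
drop_first_safe(jobs)
print(jobs)  # ['compile', 'link']
```

Both functions behave the same while assertions are on, which is exactly why this kind of bug tends to show up only in release builds.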

What to use an assert for

For things such as input validation, network communication and other aspects that are not under your control, use exceptions and stay away from assert.
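As a sketch of the exception side, assume a hypothetical helper `parse_port` that receives text typed by a user. Bad input here is not a bug in the program, so it raises an exception the caller can catch and report.

```python
def parse_port(text):
    """Turn user-supplied text into a TCP port number."""
    try:
        port = int(text)
    except ValueError:
        # Not under our control: report it, do not assert on it.
        raise ValueError(f"not a number: {text!r}") from None
    if not 0 < port < 65536:
        raise ValueError(f"port out of range: {port}")
    return port


try:
    parse_port("http")
except ValueError as err:
    print(f"Please check your settings ({err})")
```

These checks stay active in every build, because the user can get them wrong at any time.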

But when you at some point want to make sure that you did not mess up transitions of your state machine, that is a job for an assert. In general, checking invariants is the poster child for using assertions. Not only do they alert you when you messed up rewriting something, but they also serve as documentation that cannot get out of date.

So instead of a passive and inconspicuous

# We should never get here.

put a

assert False, "Did you introduce a new state and forget to handle it here?"

and feel the relief of crossing that item off your worry list.
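In context, that could look like the following sketch. The `State` enum and the `next_state` function are invented for illustration; any state machine with an explicit list of states works the same way.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    RUNNING = auto()
    DONE = auto()


def next_state(state):
    """Advance the (hypothetical) state machine by one step."""
    if state is State.IDLE:
        return State.RUNNING
    if state is State.RUNNING:
        return State.DONE
    if state is State.DONE:
        return State.DONE
    # Reached only if someone adds a State member without a branch above.
    assert False, "Did you introduce a new state and forget to handle it here?"


print(next_state(State.IDLE))  # State.RUNNING
```

Note that with assertions disabled (`python -O`), an unhandled state would fall through and return `None`, so this check is meant to catch the bug while you are still developing.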

Don’t take “state machine” too literally here. You can also use it three function calls deep to assert on something that the outer-most function should have already established (e.g. assert len(self.queue) > 1).

Overview

To summarize, let’s contrast a few aspects of both techniques.

| | ASSERTION | EXCEPTION HANDLING |
|---|---|---|
| context | things under your control | expected but also unforeseeable failures |
| context | must not happen | contingency we might have to deal with |
| think | “Better safe than sorry.” | “How can this go wrong and can I do something about it?” |
| integration | quiescent almost always | part of your code flow/logic |
| for whom | developer | developer/user |
| complexity | keep cheap | can become complex if need be |
| switchable | may be dropped in release mode | always there |
| effect | in your face ⇒ get to programming | catchable/suppressible |

Assertions in the field

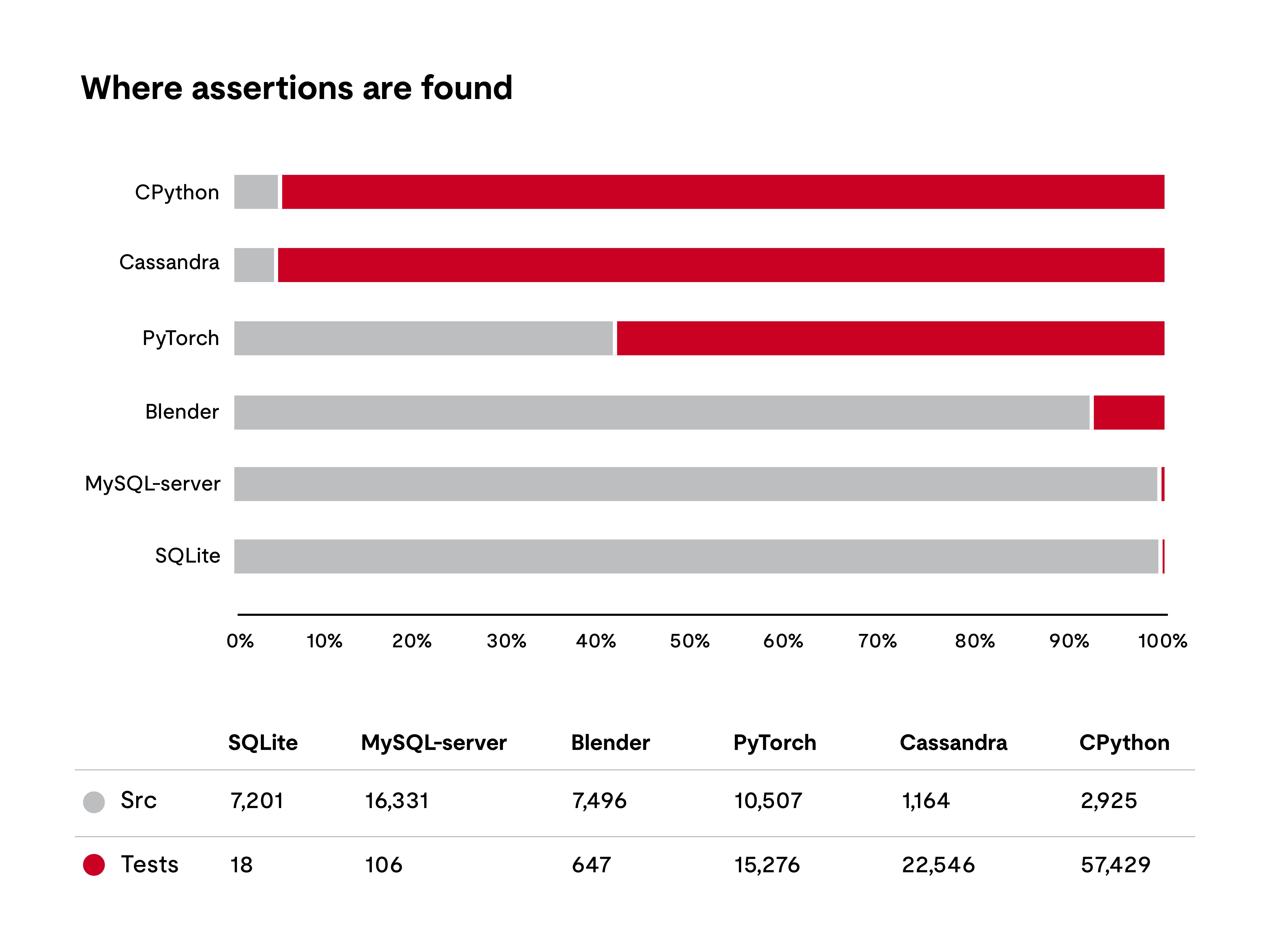

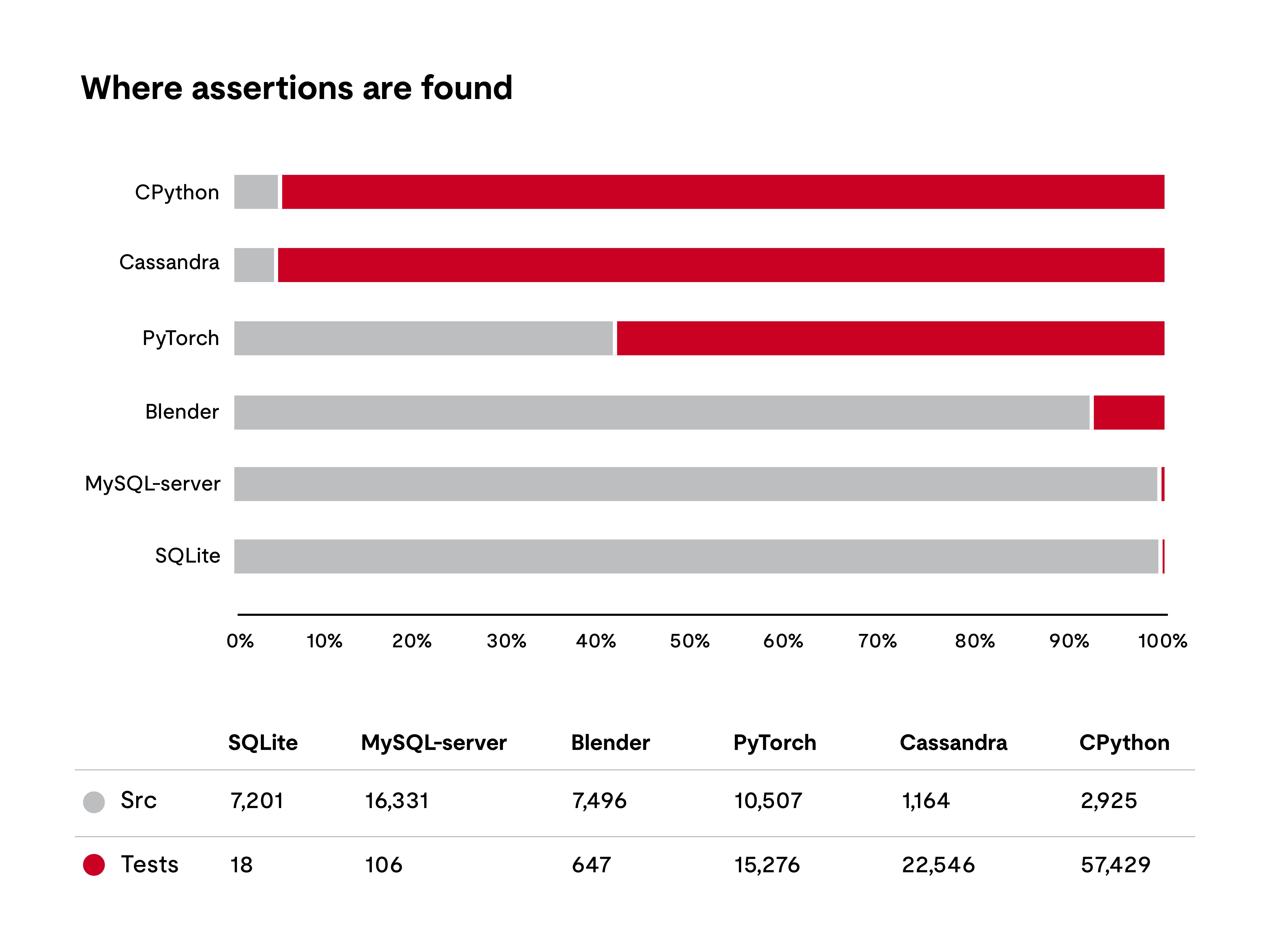

To understand who might back my opinion, I picked a couple of open-source projects and downloaded their repositories. Then, I manually divided the source code into implementation and test folders and ran a script that crudely skips comments and tallies everything that counts as an assert (adapted to the languages I found: LISP, Lua, JavaScript/TypeScript, Python, Java and of course the C family).
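The script itself is not shown here. As a rough idea of the approach, a tally of this kind could be sketched as follows. The regular expression, the comment prefixes and the function name are assumptions for illustration, not the patterns actually used for the graph.

```python
import re

# Assumed pattern: plain assert, assertXyz/Assert.Xyz helpers, and
# ASSERT_*/EXPECT_* style macros.
ASSERT_RE = re.compile(r"\b(?:[Aa]ssert\w*|ASSERT_\w+|EXPECT_\w+)\b")
# Assumed line-comment markers for Python, the C family, Lua and LISP.
COMMENT_PREFIXES = ("#", "//", "--", ";")


def count_asserts(source):
    """Count assert-like tokens, skipping lines that are only a comment."""
    total = 0
    for line in source.splitlines():
        if line.strip().startswith(COMMENT_PREFIXES):
            continue
        total += len(ASSERT_RE.findall(line))
    return total


sample = """
# assert in a comment is skipped
assert x > 0
self.assertEqual(a, b)
// ASSERT_EQ(a, b) is skipped as well
EXPECT_NEAR(a, b, 0.1);
value = 3
"""
print(count_asserts(sample))  # 3
```

A sketch like this ignores block comments, trailing comments and string literals, so its counts are only approximate.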

As opposed to what I wrote above, I did also look for the various assertions from unit testing frameworks to generate this graph. First, because of my reasoning that plain assert and unit testing share a common ground. Second, it would not have been a very interesting comparison otherwise.

Results

Ordered by increasing percentage of assertions in the implementation, we start with CPython (implemented in C as the name implies) and Cassandra (Java). 95 % of all assertions are to be found in the tests. Nevertheless, I wouldn’t want to debate over the remaining approximately 4,000 assertions in production code.

PyTorch (C++) shows a stark increase to an almost balanced distribution (41 %). The 3D modeler Blender is again implemented in C++ and has 92 % of all assertions in the implementation. To be fair, testing seems to be done exclusively in Python (i.e. fewer lines of code), and there are not that many tests in general. MySQL (another C++ project) on the other hand has quite a few tests, but they dwindle compared to the amount of code and asserts in the implementation.

For SQLite, the situation is distorted. We do find about a dozen asserts in the tests, but that is not what the project uses for testing. Instead, there are about 10,000 files with a .test extension. Looking inside, we find a kind of DSL that allows for plain SQL statements and subsequent tests of their effects/results. So, it seems well tested but has almost all assertions in the implementation.

Conclusion

There is great variety, from most asserts in the implementation through a balanced mix to the majority in tests. However, looking at these examples, even the latter does not strike me as written against a “no asserts in production code” rule. As laid out above, I argue that the statement should rather be “no asserts instead of exceptions.”

As an additional perspective, consider code contracts, a heavy-duty version of assert (since the beginning in Eiffel and more recently available in .NET for instance). They let you specify

- pre- and post-conditions for methods, and

- invariants for classes that hold before/after every method call.

And just like assertions, they can be compiled out for release builds, and given their much higher cost, they more likely are. Unlike assertions, you only put them on your production code, because that’s what they describe.
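Python has no built-in code contracts, but the idea can be approximated with plain asserts. The following is only a sketch under that assumption; the `Account` class and its rules are invented for illustration and do not mirror the Eiffel or .NET syntax.

```python
class Account:
    """Toy class whose contract is emulated with plain asserts."""

    def __init__(self, balance=0):
        self.balance = balance
        self._check_invariant()

    def _check_invariant(self):
        # Class invariant: must hold before and after every method call.
        assert self.balance >= 0, "balance must never be negative"

    def withdraw(self, amount):
        self._check_invariant()
        # Precondition: the caller has to guarantee this.
        assert 0 < amount <= self.balance, "amount must be positive and covered"
        old_balance = self.balance
        self.balance -= amount
        # Postcondition: what the method promises in return.
        assert self.balance == old_balance - amount
        self._check_invariant()
        return self.balance


account = Account(10)
print(account.withdraw(4))  # 6
```

As with any assert, all of these checks vanish under `python -O`, which mirrors contracts being compiled out of release builds.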

So, if they are a mightier assert and belong in production code, why would you not put the assert there, too? In other words, what are you waiting for? Or, to say it in Python:

assert "assert" in production code.
