First of all, why is this process important?

In my classes and assessments, I get a lot of questions like, "We do a huge amount of requirements testing; is the extra effort for integration testing really worth it?"

To answer this question, we must first clarify what integration tests mean.

The purpose of integration tests is to check compliance with the system architecture. This includes checking the interfaces and the dynamic behavior defined in SYS.3, the System Architectural Design process.

A better question: "Can requirements tests prove conformity with the system architecture?"

And the answer is: Of course not, simply because these tests test against requirements and not against the architecture.

So far, so good. But what is the added value of testing against the architecture anyway? If the functionality works well, isn't that good enough?

Let's take a closer look: Can a nonconformity with the architecture be detected in requirements testing?

Yes, it can, but there is no guarantee.

You could multiply the number of requirement tests by one thousand, ten thousand, one hundred thousand, but there is still no guarantee.

The cost would explode, but the system could still have errors that could have been avoided simply by testing against the architecture.

Now here's the bottom line: Performing integration testing gives you a more robust system at a lower cost compared to exhaustive requirement testing.

The following are the most important aspects of System Integration and Integration Test in Automotive SPICE®:

Define a strategy for integration and integration testing

A strategy is an easy-to-understand instructional description. This is especially important for larger, distributed projects, so that everyone involved knows how to proceed. The strategy first describes in which sample phases new versions of system components become available. Each time this happens, integration tests must be performed or repeated. The strategy also needs to describe the test sequence, which follows the sequence of electromechanical steps that the system uses to perform its task.

Let's take an example: Consider driver software that transmits a digital value to a digital/analog converter, which outputs an analog signal to a motor, which in turn sets a mechanical part in motion. The logical test sequence is obvious: the tests are performed in exactly this order. What would be the point of measuring the acceleration of the mechanical part without first ensuring that the previous steps work correctly?

In addition, Automotive SPICE requires a "regression test strategy." Regression testing simply means that if you change something in the system, you make sure that everything that hasn't changed still works well. Applied to the previous example, if you modify the driver software, you usually run all the following integration tests again. But if you modify the mechanical part, you do not need to test the interface between the driver software and the digital/analog converter again.
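The regression rule from this example can be sketched in a few lines of Python. This is only an illustration: the element names, the `CHAIN` list and both functions are hypothetical, and the selection rule assumes that a change can affect the interfaces of the changed element and everything downstream of it, nothing upstream.

```python
# Hypothetical signal chain from the example above, in signal order.
CHAIN = ["driver_software", "dac", "motor", "mechanical_part"]


def interface_tests(chain):
    """Return one integration test per interface between neighbors, in signal order."""
    return [(chain[i], chain[i + 1]) for i in range(len(chain) - 1)]


def regression_tests(chain, changed):
    """Select the interface tests to repeat after `changed` was modified.

    Assumption: only the interfaces the changed element takes part in,
    and all interfaces downstream of it, can be affected.
    """
    # Start with the interface that feeds the changed element (if there is one).
    start = max(chain.index(changed) - 1, 0)
    return interface_tests(chain)[start:]


if __name__ == "__main__":
    for element in CHAIN:
        print(element, "->", regression_tests(CHAIN, element))
```

With this rule, a change to the driver software selects every interface test, while a change to the mechanical part selects only the motor-to-mechanics interface. A real regression test strategy would also have to justify why upstream interfaces cannot be affected.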

Provide bidirectional traceability and consistency to the system architecture

Traceability means that for each relevant architectural element, such as an interface, you can locate the corresponding test. If you can also get from each test back to the architectural element it covers, the traceability is bidirectional.

Now what does consistency mean? In the example with the interface, consistency would require that:

- The interface is linked to the correct test (and not to the test of something else).

- This test is suitable to test the interface completely.

If this is not the case, additional tests must be linked. Consistency also requires that the tests actually test the interface correctly. The goal, in other words, is: no faulty tests.
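The mechanical part of this check, finding architectural elements without a test and tests without an architectural element, is easy to automate. The following is a minimal sketch, assuming the links are available as simple (element, test) pairs; the function name and all identifiers such as `IF_driver_dac` or `IT_001` are made up for illustration.

```python
from collections import defaultdict


def trace_gaps(elements, tests, links):
    """Report gaps in bidirectional traceability.

    elements: identifiers of architectural elements (e.g. interfaces)
    tests:    identifiers of integration test cases
    links:    (element, test) pairs taken from the traceability data
    """
    forward = defaultdict(set)   # element -> linked tests
    backward = defaultdict(set)  # test -> linked elements
    for element, test in links:
        forward[element].add(test)
        backward[test].add(element)
    return {
        "elements_without_test": sorted(set(elements) - set(forward)),
        "tests_without_element": sorted(set(tests) - set(backward)),
    }


if __name__ == "__main__":
    # Hypothetical example data.
    elements = ["IF_driver_dac", "IF_dac_motor", "IF_motor_mech"]
    tests = ["IT_001", "IT_002", "IT_003"]
    links = [("IF_driver_dac", "IT_001"), ("IF_dac_motor", "IT_002")]
    print(trace_gaps(elements, tests, links))
```

A script like this only shows that links exist. Whether a linked test is the right one and tests the interface completely and correctly still has to be confirmed by review.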

Summarize and communicate the test results

The result is usually referred to as a test summary report. It should be sent to the people who need this information, such as the development team, the project manager and the quality engineer.

Let's take a closer look at how this report should look. As the name suggests, it should summarize the results and hide unnecessary details. What is the main message that the summary report should convey? It should, of course, show compliance with the system architecture.

Here’s a counterexample often seen in assessments.

The report contains pie charts showing the 139 tests that should have been performed, of which 11 could not be performed and 10 failed. That's it. There is no information as to why the 11 tests could not be carried out and what the risks are.

There is also no information about how big the problem is with the 10 failed tests.

Nor does the report show compliance with the system architecture.

In fact, the system architecture is not mentioned at all.

The report would have to relate the results to the 85 architectural elements, not just to the 139 tests. Omissions like these, of course, lead to weaknesses being recorded in the assessment.
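One way to relate test results to architectural elements is to derive a verdict per element from the results of its linked tests. The sketch below assumes a simple mapping from elements to test cases; the status names, the verdict labels and all identifiers are hypothetical and do not come from Automotive SPICE.

```python
from collections import Counter


def verdict_per_element(element_tests, results):
    """Derive one verdict per architectural element.

    element_tests: element -> list of linked test case ids
    results:       test case id -> "passed" or "failed"; a missing id counts as "not_run"
    """
    verdicts = {}
    for element, tests in element_tests.items():
        statuses = [results.get(test, "not_run") for test in tests]
        if not statuses:
            verdicts[element] = "no test linked"
        elif "failed" in statuses:
            verdicts[element] = "nonconforming"
        elif "not_run" in statuses:
            verdicts[element] = "open"
        else:
            verdicts[element] = "verified"
    return verdicts


if __name__ == "__main__":
    # Hypothetical example data.
    element_tests = {
        "IF_driver_dac": ["IT_001", "IT_002"],
        "IF_dac_motor": ["IT_003"],
        "IF_motor_mech": ["IT_004"],
        "IF_diagnostics": [],
    }
    results = {"IT_001": "passed", "IT_002": "passed", "IT_003": "failed"}
    verdicts = verdict_per_element(element_tests, results)
    for element, verdict in verdicts.items():
        print(f"{element}: {verdict}")
    print(Counter(verdicts.values()))
```

A summary built this way answers the question the pie chart leaves open: which parts of the architecture are verified, which are not, and where tests are still missing. The reasons for tests that were not run and the risk behind each failed test still need to be added in writing.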

System Integration and Integration Test – the process according to Automotive SPICE®

The purpose of the System Integration and Integration Test process is to integrate the system items to produce an integrated system consistent with the system architectural design. It is also intended to verify that the system items are tested to provide evidence for compliance of the integrated system items with the system architectural design, including the interfaces between system items.

Base Practice (BP) 1: Develop a strategy for integrating the system items consistent with the project plan and the release plan. Identify system items based on the system architectural design and define a sequence for integrating them.

BP2: Develop a strategy for testing the integrated system items following the integration strategy. This includes a regression test strategy for re-testing integrated system items if a system item is changed.

BP3: Develop the test specification for system integration test including the test cases for each integration step of a system item according to the system integration test strategy. The test specification shall be suitable to provide evidence for compliance of the integrated system items with the system architectural design.

- NOTE 1: The interface descriptions between system elements are an input for the system integration test cases.

- NOTE 2: Compliance to the architectural design means that the specified integration tests are suitable to prove that the interfaces between the system items fulfill the specification given by the system architectural design.

- NOTE 3: The system integration test cases may focus on:

  - the correct signal flow between system items
  - the timeliness and timing dependencies of signal flow between system items
  - the correct interpretation of signals by all system items using an interface
  - the dynamic interaction between system items

- NOTE 4: The system integration test may be supported using simulation of the environment (e.g. Hardware-in-the-Loop simulation, vehicle network simulations, digital mock-up).

BP4: Integrate the system items to an integrated system according to the system integration strategy.

- NOTE 5: The system integration can be performed step-wise, integrating system items (e.g. the hardware elements as prototype hardware, peripherals (sensors and actuators), the mechanics and integrated software) to produce a system consistent with the system architectural design.

BP5: Select test cases from the system integration test specification. The selection of test cases shall have sufficient coverage according to the system integration test strategy and the release plan.

BP6: Perform the system integration test using the selected test cases. Record the integration test results and logs.

- NOTE 6: See SUP.9 for handling of non-conformances.

BP7: Establish bidirectional traceability between elements of the system architectural design and test cases included in the system integration test specification. Establish bidirectional traceability between test cases included in the system integration test specification and system integration test results.

- NOTE 7: Bidirectional traceability supports coverage, consistency and impact analysis.

BP8: Confirm consistency between elements of the system architectural design and test cases included in the system integration test specification.

- NOTE 8: Consistency is supported by bidirectional traceability and can be demonstrated by review records.

BP9: Summarize the system integration test results and communicate them to all affected parties.

- NOTE 9: Providing all necessary information from the test case execution in a summary enables other parties to judge the consequences.

What is the benefit of System Integration and Integration Test?

The system is gradually integrated and tested to find errors in the interactions between the elements of the system before System Testing (SYS.5) begins. All interfaces and dynamic behaviors are tested.

What is the content of the System Integration and Integration Test Process?

An integration strategy and integration test strategy are defined, along with a regression testing strategy. The strategy aligns with the system architecture and product release plan (BP1, BP2).

Test specifications for integration are created and their traceability and consistency to the system architecture is established (BP3, BP7, BP8).

The system elements are integrated in accordance with the strategy (BP4), system integration tests and regression tests are performed (BP5, BP6) and the results are summarized and reported (BP9).

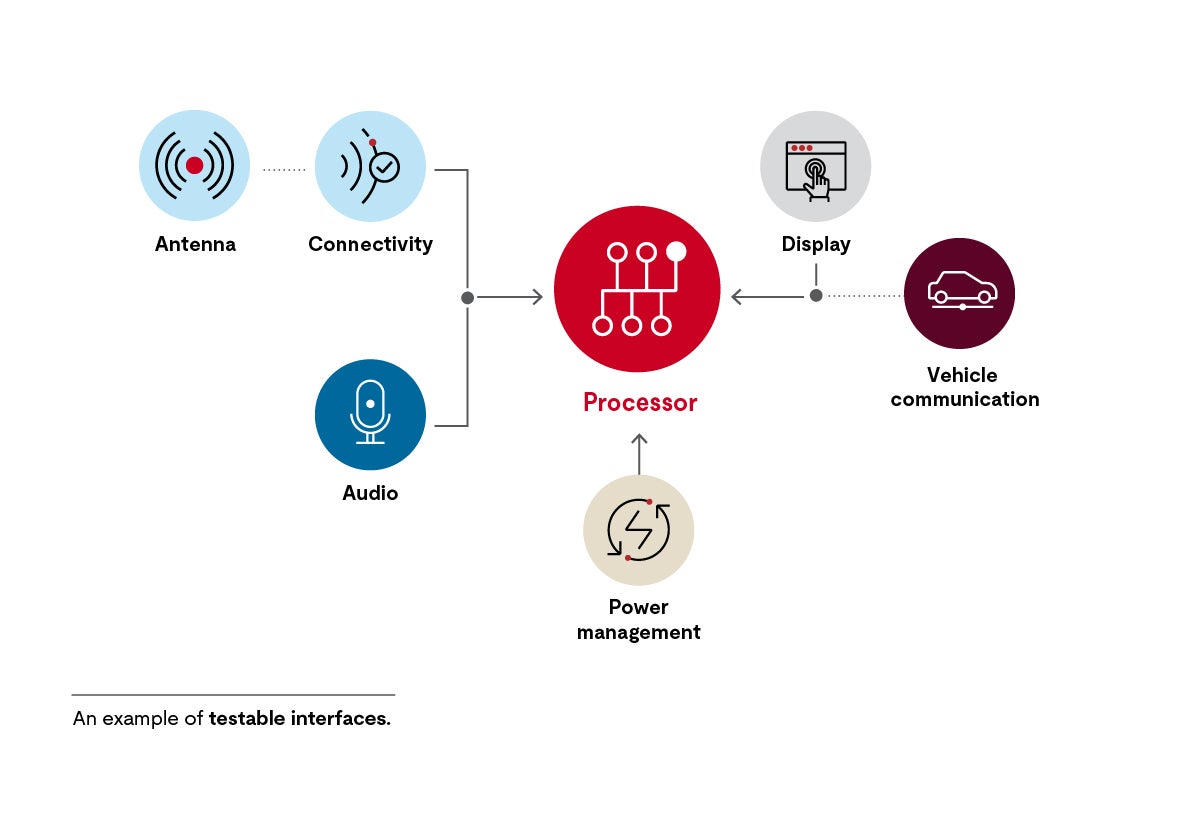

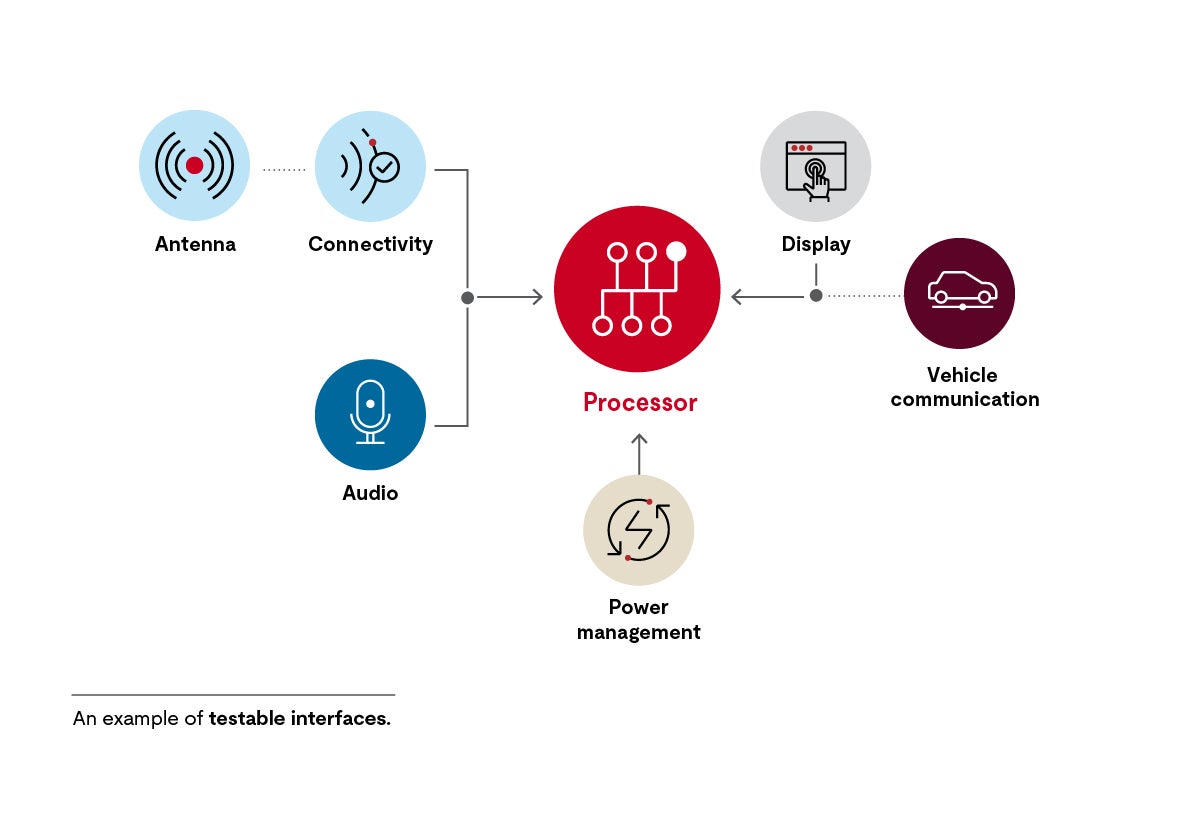

Example of testable interfaces

Experiences, problems and hints:

System integration means the integration of the system components into a complete system, not the integration into the vehicle. The system integration sequence is often unstructured with no defined approach or order.

There is often confusion about what system integration testing actually is. Each relevant interface (internal and external) and dynamic behavior must be tested. Examples include hardware-software integration, memory tests, tests of interface components together with the software, internal and external communication, and flashing under various conditions.

The dynamic behavior of the system is often not considered in integration testing.

Especially in safety-related systems, reaction times need to be tested.
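A reaction-time check can be as simple as evaluating a time-stamped event log recorded during the test run. The following is a minimal sketch, assuming the log is a time-ordered list of (timestamp in ms, event name) pairs; the event names `fault_injected` and `safe_state` and the 50 ms limit are invented for the example.

```python
def reaction_times_ms(events, stimulus, response):
    """Pair each stimulus with the next response and return the delays in ms.

    events: list of (timestamp_ms, event_name), sorted by timestamp.
    Returns (delays, unanswered), where `unanswered` is True if the last
    stimulus never received a response.
    """
    delays = []
    pending = None  # timestamp of a stimulus that is still waiting for its response
    for timestamp, name in events:
        if name == stimulus and pending is None:
            pending = timestamp
        elif name == response and pending is not None:
            delays.append(timestamp - pending)
            pending = None
    return delays, pending is not None


def violations(delays, limit_ms):
    """Return all measured delays that exceed the allowed reaction time."""
    return [delay for delay in delays if delay > limit_ms]


if __name__ == "__main__":
    # Hypothetical log: two injected faults and the resulting safe-state transitions.
    log = [
        (0, "fault_injected"),
        (42, "safe_state"),
        (100, "fault_injected"),
        (180, "safe_state"),
    ]
    delays, unanswered = reaction_times_ms(log, "fault_injected", "safe_state")
    print(delays, unanswered, violations(delays, limit_ms=50))
```

In practice the limit would come from the dynamic behavior specified in the system architecture, and the timestamps from the test bench or a Hardware-in-the-Loop setup.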

The system integration test is sometimes performed in joint test runs with the system test. This is no problem as long as testing of all relevant interfaces and dynamic behaviors can be demonstrated.

System integration often involves the electrical design lead, who receives new board samples and must prove that the board works correctly with the software.

This is a good starting point for building a system integration and test strategy and procedure.

System integration testing must confirm that the following elements of the system architecture are tested with enough coverage: the hardware-software interfaces and the dynamic behavior. Also, resource consumption must be measured against objectives defined in SYS.3.

When evaluating system level dynamic behavior, it is important to consider how the system reacts to the major vehicle modes such as ignition on/off, delayed off, crank, etc.

About the author

Thomas Liedtke is a computer scientist by background. After completing his PhD at the University of Stuttgart, he entered the telecommunications industry. Over the course of 14 years at Alcatel-Lucent, he successfully spearheaded a variety of projects and managed several departments. He entered the field of consulting over a decade ago and has been sharing his experience with clients in a variety of industries ever since, primarily in the areas of safety, security, privacy and project management.

He has been involved with several committees, particularly the working group for Automotive Cybersecurity at German Electrical and the VDA Cybersecurity Work Group organized by the (DIN standard NA052-00-32-11AK and ISO standard TC22/SC32/WG11).